Elon Musk has famously said, “AI is far more dangerous than nukes.” His statement has some truth to it, and it has succeeded in raising our awareness of the dangers of AI.

As leaders, part of our job is to ensure that what our companies do is safe. We don’t want to harm our business partners, employees, customers, or anyone else we are working with. We can manage AI to reduce risk even before regulatory bodies step in to force us to do so.

Most industries have regulations that govern how we manage risk to ensure safety. In the world of AI, however, very few regulations address safety. We do have regulations around privacy, and those are extremely important to the AI world, but safety itself remains largely unregulated.

AI has the potential to be dangerous. As a result, we need to create ways to manage the risk presented by AI-based systems. Singapore has provided us with a Model AI Governance Framework. This framework is an excellent place to start understanding the key issues for governing AI and managing risk.

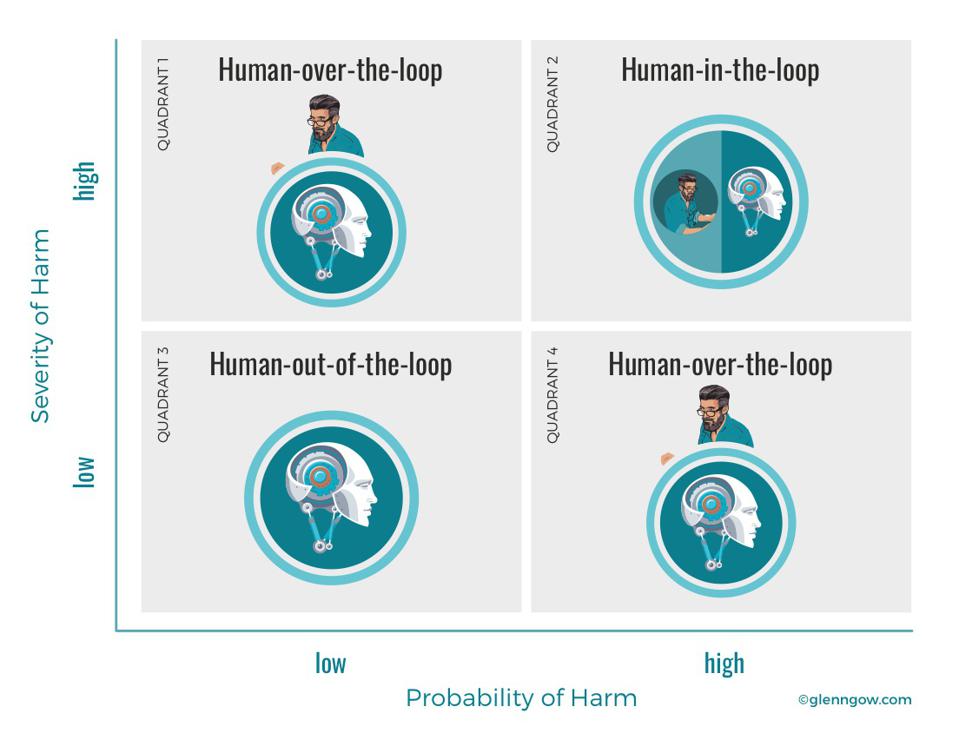

The framework rests on two main factors:

- The level of human involvement in AI

- The potential harm caused by AI

The first main factor: the level of human involvement

At times, AI will work alone, and many times, AI will work closely with humans in making decisions. Let’s look at three ways this can manifest itself.

Human-out-of-the-loop: This AI runs on its own, without human oversight. The AI system has full control, and the human cannot override the AI’s decision.

Most recommendation engines are an example of AI where we are comfortable with a human-out-of-the-loop approach, such as suggesting what song to listen to next or what piece of clothing to look at next on an e-commerce website.

Human-in-the-loop: This AI runs only to provide suggestions to the human. Nothing can happen without a human command to proceed with a suggestion.

An example of AI where we really want a human-in-the-loop is medical diagnostics and treatment. We still want the physician to make the final decision.

Human-over-the-loop: This AI is designed to let the human intercede if the human disagrees or determines the AI has failed. If the human isn’t paying attention, though, the AI will proceed without human intervention.

An example of where we want a human-over-the-loop system might be AI-based traffic prediction systems. Most of the time, the AI will suggest the shortest route to the next destination, but humans can override that decision whenever they want to be involved.
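The difference between the three modes comes down to who has the final say and what happens when the human does nothing. Here is a minimal Python sketch of that control flow. The function names and the string-based "decisions" are hypothetical illustrations, not part of the Singapore framework:

```python
from typing import Callable, Optional


def human_out_of_the_loop(ai_decision: str) -> str:
    """The AI's decision is executed as-is; no human is consulted."""
    return ai_decision


def human_in_the_loop(
    ai_suggestion: str, human_approves: Callable[[str], bool]
) -> Optional[str]:
    """Nothing happens unless the human explicitly approves the suggestion."""
    return ai_suggestion if human_approves(ai_suggestion) else None


def human_over_the_loop(
    ai_decision: str, human_override: Optional[str] = None
) -> str:
    """The AI proceeds by default; the human may step in with an override."""
    return human_override if human_override is not None else ai_decision
```

Note the default in each case: with human-in-the-loop, silence from the human means nothing happens, while with human-over-the-loop, silence means the AI's decision goes ahead.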

The second main factor: the potential harm caused by AI

In thinking about managing risk, we need to ask about the severity of harm and the probability of harm. Let’s use a matrix to look at how this could work.

Quadrant 1: Human-over-the-loop

Probability of harm is low; Severity of harm is high

Example: you have important corporate data that is both protected behind strong firewalls and encrypted. It’s unlikely that hackers can penetrate your firewalls and also decipher the encrypted data. However, if they do, the severity of that attack is high.

You will want a human-over-the-loop approach here. Your AI-based cybersecurity solution handles most of these attacks quite well on its own, but in certain circumstances, like the discovery of a particularly insidious brand-new attack, you will want humans to keep a close eye on what is happening. (See “If Microsoft Can Be Hacked, What About Your Company? How AI is Transforming Cybersecurity.”)

Quadrant 2: Human-in-the-loop

Probability of harm is high; Severity of harm is high

Example: your corporate development team uses AI to identify potential acquisition targets for the company. Also, they use AI to conduct financial due diligence on the various targets. Both the probability and severity of harm are high in this decision.

AI can be extremely helpful in identifying opportunities humans can’t see. Also, AI can provide excellent predictive models of how a potential acquisition will work out. You will want a human-in-the-loop for this type of decision. AI is useful for augmenting the decision, not making it.

Quadrant 3: Human-out-of-the-loop

Probability of harm is low; Severity of harm is low

Example: any recommendation engine that helps consumers make product-buying decisions. Many e-commerce sites will help a consumer find the products they are most likely to buy. Also, companies like Spotify will recommend what songs you might want to hear next.

For recommendation engines, the probability of harm is quite low, and the severity of looking at a shoe you don’t like or listening to a song you don’t like is also low. Humans are not needed.

Quadrant 4: Human-over-the-loop

Probability of harm is high; Severity of harm is low

Example: some AI systems can help with compliance audits. The probability of harm is high because the systems may not yet be perfect. Yet, the severity of harm is low because the company may be allowed to correct the non-compliance or suffer a small fine as a result.

Some compliance audits are more important than others. A human can decide where they should be involved depending on how important that particular compliance issue is to the company.
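The four quadrants amount to a simple lookup from a (probability, severity) pair to an oversight mode. Here is a minimal Python sketch of that lookup, assuming a two-level low/high rating for each factor and treating recommendation engines as low probability and low severity, as the Quadrant 3 discussion describes. The names `Level`, `Oversight`, and `recommend_oversight` are hypothetical and not part of the Singapore framework:

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


class Oversight(Enum):
    OUT_OF_THE_LOOP = "human-out-of-the-loop"
    OVER_THE_LOOP = "human-over-the-loop"
    IN_THE_LOOP = "human-in-the-loop"


# Keys are (probability of harm, severity of harm), one entry per quadrant.
QUADRANTS = {
    (Level.LOW, Level.HIGH): Oversight.OVER_THE_LOOP,    # Quadrant 1: cybersecurity
    (Level.HIGH, Level.HIGH): Oversight.IN_THE_LOOP,     # Quadrant 2: acquisitions
    (Level.LOW, Level.LOW): Oversight.OUT_OF_THE_LOOP,   # Quadrant 3: recommendations
    (Level.HIGH, Level.LOW): Oversight.OVER_THE_LOOP,    # Quadrant 4: compliance audits
}


def recommend_oversight(probability: Level, severity: Level) -> Oversight:
    """Return the starting-point oversight mode for a given risk profile."""
    return QUADRANTS[(probability, severity)]


if __name__ == "__main__":
    # An acquisition decision: high probability and high severity of harm.
    print(recommend_oversight(Level.HIGH, Level.HIGH).value)  # human-in-the-loop
```

In practice, a real project would rate probability and severity on a finer scale and weigh other factors as well, so the result of a lookup like this is a starting point for discussion rather than a final answer.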

The Model AI Governance Framework gives boards and management a starting place to manage risk with AI projects. While many other factors determine the best risk-management approach, this framework has the advantage of being easy to understand, so even executives without an AI background can help drive the best risk-management approach for the company.

AI could be dangerous, but managing when and how humans will be in control enables us to reduce our company’s risk factors greatly.